Research

Research Vision

My goal is to improve the lives of people with disabilities by creating biomedical technologies that help them reach their desired level of functional restoration while respecting their autonomy and dignity. In particular, I am interested in developing devices that interface with the nervous system and speak its natural language: neuronal spiking activity. I want to work toward this goal as a professor at an academic research institution doing research in neuromorphic engineering. My current postdoctoral research focuses on sensorimotor brain-computer interfaces (BCIs), and my Ph.D. research focused on sensory feedback for upper limb prostheses, but I am broadly interested in any neural interface device that aims to integrate seamlessly with the human body.

This field is exciting for me because it provides an opportunity to pursue multiple fascinating and impactful avenues of exploration.

- It allows me to build systems that can fundamentally improve the lives of people affected by injuries or disorders that lead to limb loss or paralysis. In the future I might work on other systems as well, such as bionic vision.

- It lets me gain insight into the mechanisms and processing underlying the most complex and extraordinary structure known to humankind: the nervous system. For instance, how does the embodied brain measure and interpret the environment around it? How does the brain generalize from experience so that it performs well in a variety of novel contexts? What about the architecture of the brain makes it so power efficient and robust to noise? Neural interfaces provide a unique opportunity to explore these questions.

- Finally, based on those neuroscientific insights, I can develop novel artificial devices inspired by neural processing. These devices (such as sensors or robots) could outperform current state-of-the-art technology in functionality, adaptability, and power consumption.

These three avenues represent the pillars of my research interests: devices for clinical translation, mechanisms of neural processing, and neuro-inspired technological systems. These pillars are all built on the foundation of models of spiking activity that mimic our latest understanding of the nervous system.

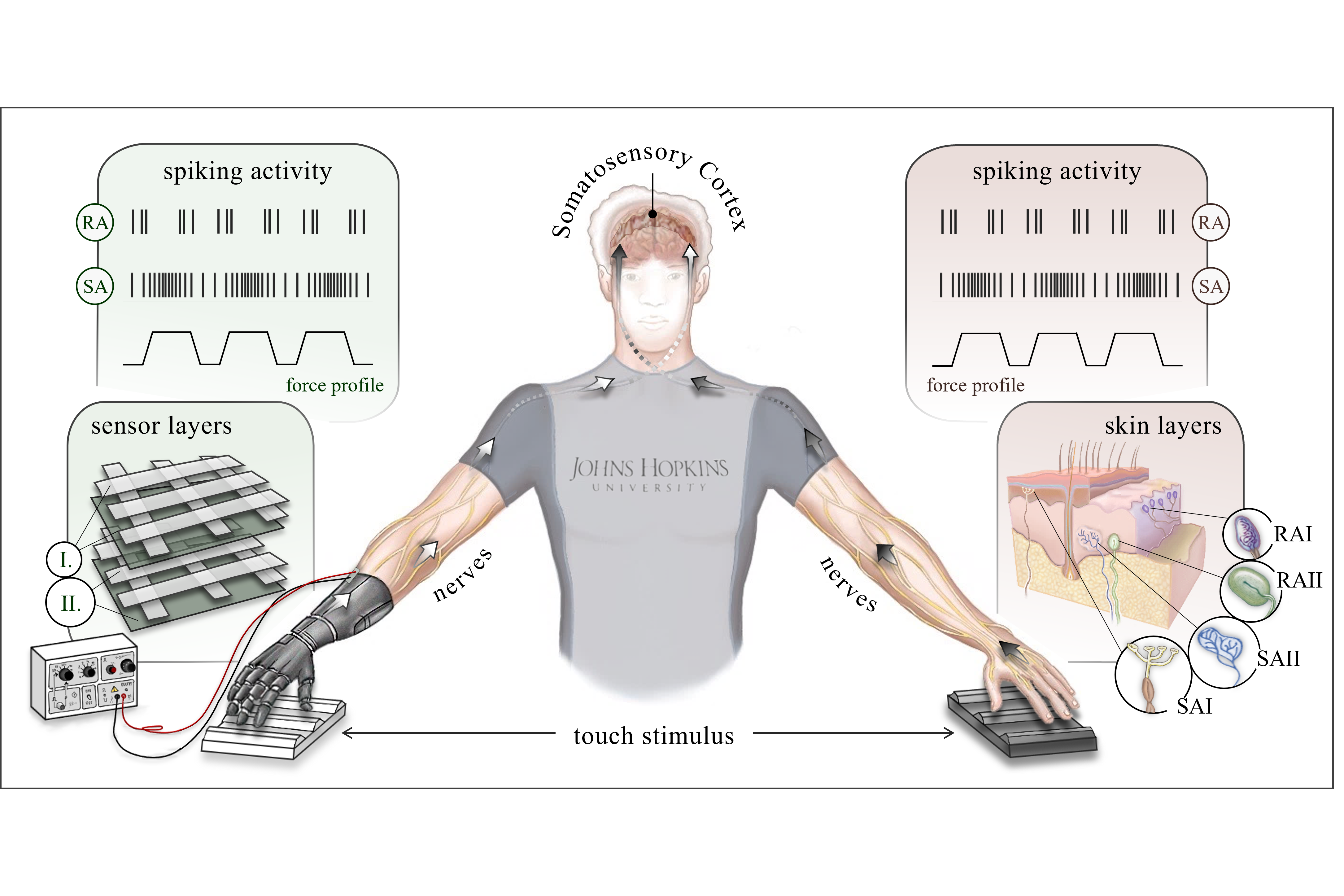

Neuromorphic Encoding of Tactile Stimuli

This project was the core of my doctoral research. I explored biomimetic processing techniques that replicate how biology encodes and compresses tactile information as neuron-like spiking activity, and I validated the models by classifying different textures. I first developed a preliminary encoding scheme that transformed analog tactile sensor readings into spiking activity using the Izhikevich neuron model. This scheme mimicked the activity of slowly adapting (SA) and rapidly adapting (RA) mechanoreceptors in the skin, which respond to sustained and dynamic aspects of tactile events, respectively. I then added encoding algorithms that make the spiking representations invariant to scanning speed and contact force, matching the perceptual invariance of our daily experience. The invariant representations yielded higher classification accuracy, improved computational efficiency, and a greater ability to identify textures explored under novel speed-force conditions. The speed invariance algorithm was then adapted to a real-time, human-operated texture classification system and showed similar benefits. These results show that invariant neuromorphic representations enable superior performance in neurorobotic sensing systems. Additionally, because the neuromorphic representations are grounded in biological processing, this work could serve as the basis for naturalistic sensory feedback for upper limb amputees.
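As a rough illustration of this kind of encoding, the sketch below drives two Izhikevich neurons with a tactile pressure trace. It is a minimal example under stated assumptions, not the model used in the publications listed here: the neuron parameters are the standard "regular spiking" values for the Izhikevich model, while the function names, gains, time step, normalized pressure trace, and the choice to drive the SA-like neuron with the pressure itself and the RA-like neuron with its rate of change are all hypothetical choices made for illustration.

```python
def izhikevich_spikes(current, dt=0.5, a=0.02, b=0.2, c=-65.0, d=8.0):
    """Simulate one Izhikevich neuron; return spike times in ms.

    `current` is a sequence of input currents, one per time step of `dt` ms.
    Defaults are the standard "regular spiking" parameters.
    """
    v, u = c, b * c  # membrane potential and recovery variable
    spike_times = []
    for step, i_in in enumerate(current):
        # Forward-Euler update of the two model equations.
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + i_in)
        u += dt * a * (b * v - u)
        if v >= 30.0:  # spike: record it, then reset
            spike_times.append(step * dt)
            v = c
            u += d
    return spike_times


def encode_sa_ra(pressure, dt=0.5, sa_gain=15.0, ra_gain=1500.0):
    """Encode a normalized pressure trace as SA-like and RA-like spike times.

    Assumption: SA input follows the pressure itself (sustained contact) and
    RA input follows its absolute rate of change (dynamic contact).
    The gains are illustrative, not fitted to any sensor.
    """
    sa_current = [sa_gain * p for p in pressure]
    ra_current = [0.0] + [
        ra_gain * abs(p1 - p0) / dt for p0, p1 in zip(pressure, pressure[1:])
    ]
    return izhikevich_spikes(sa_current, dt), izhikevich_spikes(ra_current, dt)


# Hypothetical press-and-hold: pressure ramps from 0 to 1 between 100 and 150 ms.
dt = 0.5
times = [k * dt for k in range(1000)]  # 500 ms of samples
pressure = [min(max((t - 100.0) / 50.0, 0.0), 1.0) for t in times]
sa_spikes, ra_spikes = encode_sa_ra(pressure, dt)
print(f"SA spikes: {len(sa_spikes)}, RA spikes: {len(ra_spikes)}")
```

In this sketch the SA-like neuron keeps firing while the pressure is held, whereas the RA-like neuron fires only around the ramp, which mirrors the sustained versus dynamic distinction described above.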

Related publications

- M. M. Iskarous, Z. Chaudhry, F. Li, S. Bello, S. Sankar, A. Slepyan, N. Chugh, C. L. Hunt, R. Greene, and N. V. Thakor, “Invariant neuromorphic representations of tactile stimuli improve robustness of a real-time texture classification system,” Advanced Intelligent Systems, p. 2401078, 2025, doi: 10.1002/aisy.202401078.

- Z. Chaudhry, F. Li, M. Iskarous, and N. V. Thakor, “An automated tactile stimulator apparatus for neuromorphic tactile sensing,” 2023 11th International IEEE/EMBS Conference on Neural Engineering (NER), Baltimore, MD, USA, 2023, pp. 1–4.

- M. M. Iskarous, H. H. Nguyen, L. E. Osborn, J. L. Betthauser, and N. V. Thakor, “Unsupervised learning and adaptive classification of neuromorphic tactile encoding of textures,” in 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Oct. 2018, pp. 1–4.

- M. M. Iskarous, S. Sankar, Q. Li, C. L. Hunt, and N. V. Thakor, “A scalable algorithm based on spike train distance to select stimulation patterns for sensory feedback,” 2021 10th International IEEE/EMBS Conference on Neural Engineering (NER), 2021, pp. 297–300.

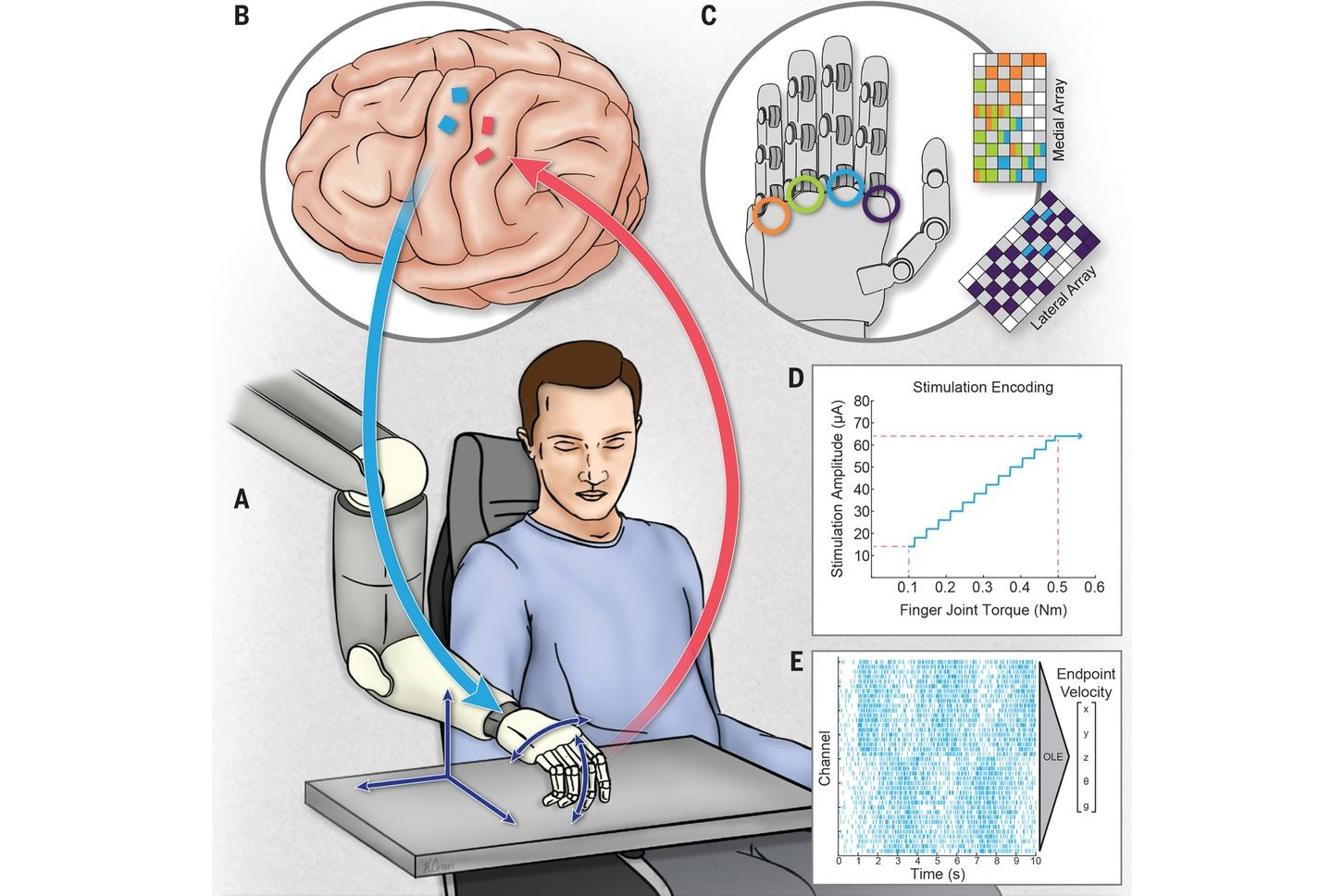

Sensorimotor Brain-Computer Interfaces

Related publications

- M. M. Iskarous, L. J. Endsley, L. E. Miller, J. L. Collinger, N. G. Hatsopoulos, and J. E. Downey, “Decoding of coordinated hand and arm movements,” in 11th International BCI Meeting, Banff, Canada, 2025.

- M. M. Iskarous, A. Alamri, C. M. Greenspon, and J. E. Downey, “Multi-electrode ICMS in somatosensory cortex provides reliable tactile perception,” in 2025 Society for Neuroscience, San Diego, CA, 2025.

- Y. Won, A. Emonds, M. M. Iskarous, C. M. Greenspon, and J. E. Downey, “Does motor cortex preferentially represent velocity or position during brain-computer interface control of the hand and the wrist?” in 2025 Society for Neuroscience, San Diego, CA, 2025.

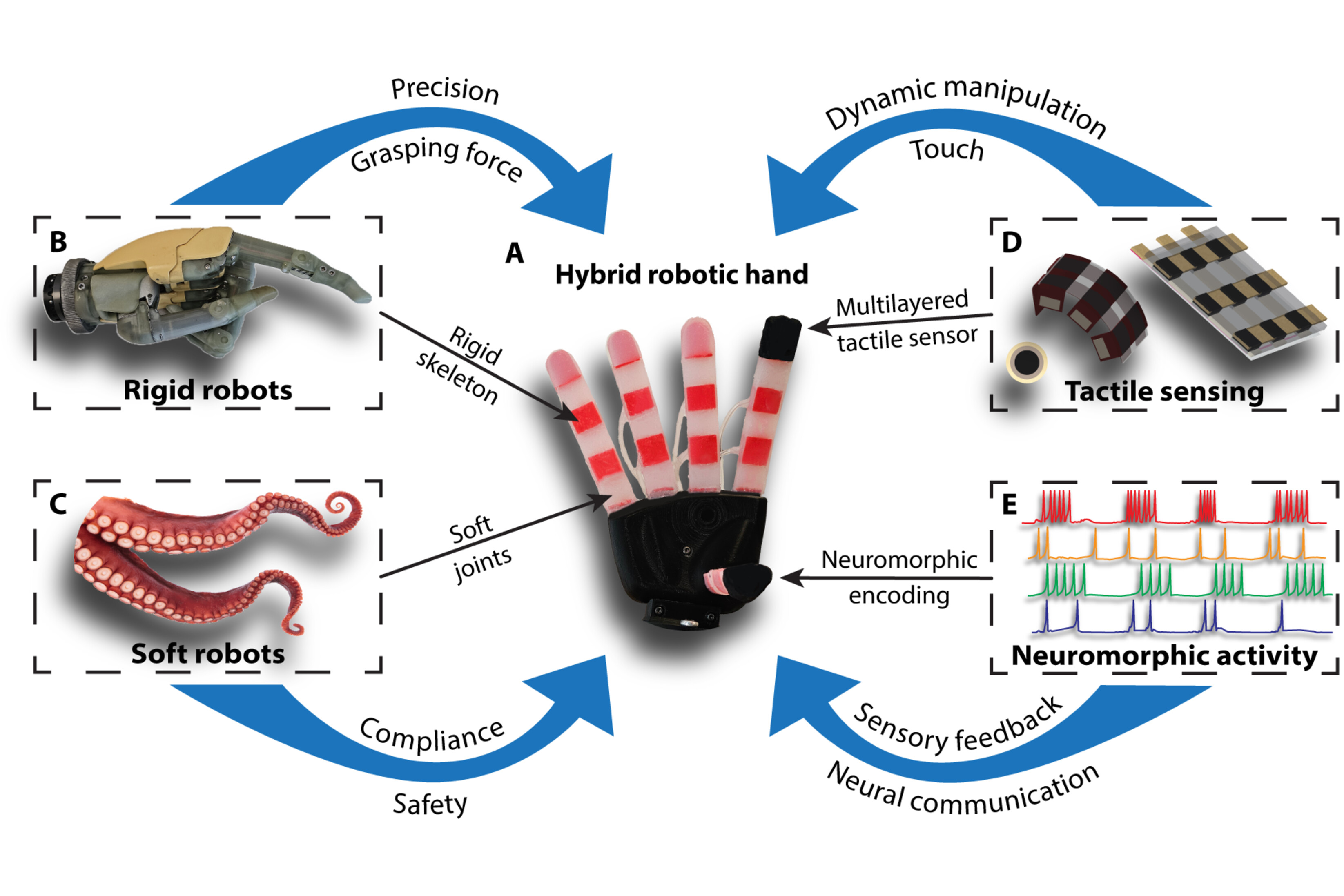

Advanced Models and Hardware for Artificial Tactile Sensing

These projects are collaborations to advance neuromorphic models of tactile sensing and to incorporate them into hardware. Sankar (2022) incorporated tactile sensors into a soft robotic finger to discriminate textures. Sankar (2025) created a hybrid robotic hand with rigid and soft robotic components to improve grasp compliance when interacting with a wide variety of objects. Rahiminejad (2021) designed an ASIC that produces SA and RA spiking responses to analog tactile readings. Parvizi-Fard (2021) created a spiking neural network that models mechanoreceptors, the cuneate nucleus, and primary somatosensory cortex to classify edge orientations.

Related publications

- S. Sankar, W. Cheng, J. Zhang, A. Slepyan, M. M. Iskarous, R. Greene, R. DeBrabander, J. Chen, A. Gupta, and N. V. Thakor, “A natural biomimetic prosthetic hand with neuromorphic tactile sensing for precise and compliant grasping,” Science Advances, vol. 11, no. 10, p. eadr9300, Mar. 2025, doi: 10.1126/sciadv.adr9300.

- A. Parvizi-Fard, M. Amiri, D. Kumar, M. M. Iskarous, and N. V. Thakor, “A functional spiking neuronal network for tactile sensing pathway to process edge orientation,” Scientific Reports, vol. 11, no. 1, pp. 1–16, Jan. 2021, doi: 10.1038/s41598-020-80132-4.

- E. Rahiminejad, A. Parvizi-Fard, M. M. Iskarous, N. V. Thakor, and M. Amiri, “A biomimetic circuit for electronic skin with application in hand prosthesis,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, pp. 1–12, 2021.

- S. Sankar, A. Slepyan, M. M. Iskarous, W. Cheng, R. DeBrabander, J. Zhang, A. Gupta, and N. V. Thakor, “Flexible multilayer tactile sensor on a soft robotic fingertip,” 2022 IEEE Sensors, Dallas, TX, USA, 2022, pp. 1–4.

Interfaces and Devices to Improve Functional Outcomes of Neural Prostheses

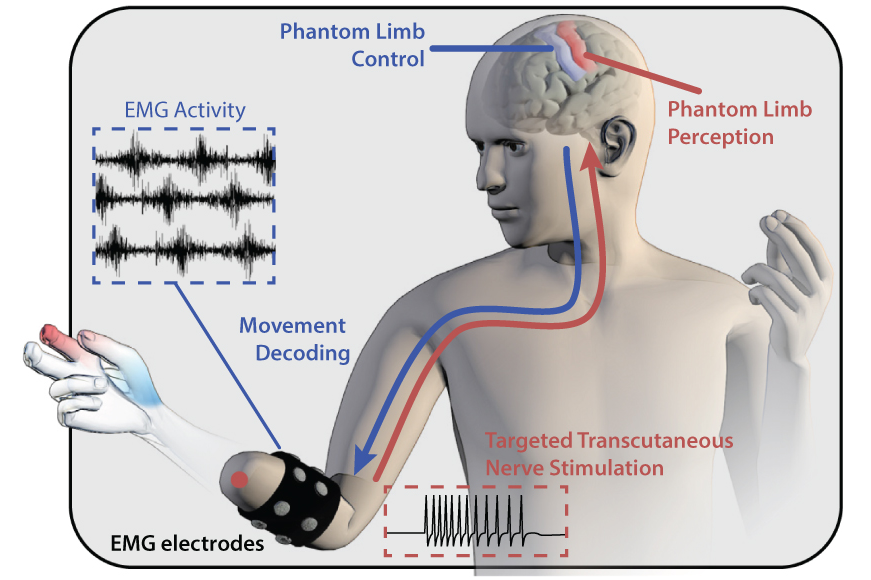

I am motivated to improve the functionality of prostheses through sensory feedback to help amputees in their daily lives. These projects were collaborations with other graduate students in my Ph.D. lab, each aimed at improving prosthesis outcomes with a different approach and goal. Osborn (2020) showed how sensory feedback through stimulation of peripheral nerves helps prosthesis users better sense their phantom limb and control the artificial limb. Tian (2022) used my SA and RA model to generate a stimulation pattern that helped a human subject in an object manipulation task. Hays (2019) integrated tactile and visual (camera-based) sensors to improve prosthesis outcomes through autonomous robotic control. Hunt (2018) demonstrated an augmented reality environment that incorporated tactile sensor readings and trajectory prediction to help prosthesis users learn to use their device more effectively. Altogether, these projects focus on incorporating different technologies and information to make prostheses more effective tools for their users.

Related publications

- L. E. Osborn, M. A. Hays, R. Bose, A. Dragomir, M. M. Iskarous, Z. Tayeb, G. M. Levay, C. L. Hunt, M. S. Fifer, G. Cheng, A. Bezerianos, and N. V. Thakor, “Sensory stimulation enhances phantom limb perception and movement decoding,” Journal of Neural Engineering, vol. 17, no. 5, p. 056006, Oct. 2020, doi: 10.1088/1741-2552/abb861.

- Y. Tian, A. Slepyan, M. M. Iskarous, S. Sankar, C. L. Hunt, and N. V. Thakor, “Real-time, dynamic sensory feedback using neuromorphic tactile signals and transcutaneous electrical nerve stimulation,” 2022 IEEE Biomedical Circuits and Systems Conference (BioCAS), Taipei, Taiwan, 2022, pp. 399–403.

- M. Hays, L. Osborn, R. Ghosh, M. Iskarous, C. Hunt, and N. V. Thakor, “Neuromorphic vision and tactile fusion for upper limb prosthesis control,” in 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), Mar. 2019, pp. 981–984.

- C. L. Hunt, A. Sharma, M. M. Iskarous, and N. V. Thakor, “Live demonstration: Augmented reality prosthesis training with real-time hand trajectory prediction and neuromorphic tactile encoding,” in 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Oct. 2018, pp. 1–1.

© 2025 Mark Iskarous

Built by Bailey Kane.